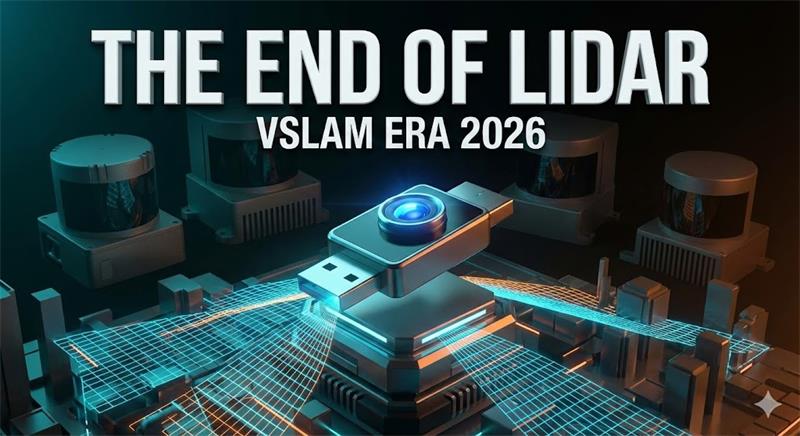

This industry analysis reviews the architectural shift from LiDAR to "Vision-First" VSLAM observed at CES 2026, led by Tesla and Segway. It identifies Global Shutter USB 3.0 camera modules as the critical hardware requirement for eliminating rolling-shutter motion distortion in cost-effective autonomous mobile robots (AMRs).

Jan 14th, 2026, Shenzhen, China. Source: Shenzhen Novel Electronics Limited

CES 2026 Deep Dive: The "LiDAR-Free" Era Has Arrived. Is Your Vision Stack Ready for the Pivot?

LAS VEGAS, Jan 6th, 2026 — If CES 2026 made one thing clear, it is that the robotics industry is undergoing a fundamental architectural shift. The era of relying solely on expensive, heavy LiDAR arrays is fading. In its place, a new paradigm has emerged: "Vision-First" VSLAM (Visual Simultaneous Localization and Mapping).

For CEOs, CTOs, and Product Managers in the robotics sector, this shift presents both a massive opportunity to lower Bill of Materials (BOM) costs and a critical engineering challenge.

The Titans Have Spoken: Vision is King

On the CES 2026 show floor, the trend was undeniable among the industry's most influential players, with Tesla and Segway leading the move to vision-first navigation.

The Insight: The market leaders are showing that cameras are no longer just auxiliary sensors; they are the primary navigation instrument.

The Hidden Engineering Trap: The "Rolling Shutter" Effect

However, for mid-sized AMR (Autonomous Mobile Robot) manufacturers, blindly following this trend leads to a common pitfall. Many engineering teams try to replicate Tesla’s VSLAM success with standard, low-cost sensors, only to hit a critical failure mode: motion distortion, often loosely called motion blur.

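Most low-cost commercial cameras use Rolling Shutter sensors, which expose and read out the image row by row rather than all at once. When a robot moves quickly, as a Keenon delivery bot or a Unitree quadruped does, the time offset between the first and last rows skews and wobbles the image, producing the so-called "Jello Effect."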
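The size of that skew can be estimated with simple arithmetic. The sketch below is a back-of-envelope model, not a vendor formula: it assumes the camera yaws at a constant rate while the rows are read out, and it measures the shift near the image centre. The function name and the example numbers (90 deg/s turn, 20 ms readout, 600 px focal length) are hypothetical, chosen only for illustration.

```python
import math


def rolling_shutter_skew_px(yaw_rate_dps: float, readout_ms: float, focal_px: float) -> float:
    """Approximate horizontal offset (pixels) between the first and last row
    of one frame while the camera yaws at a constant rate.

    Rows are exposed one after another over `readout_ms`, so the last row sees
    the scene after the camera has turned by theta = yaw_rate * readout time.
    Near the image centre, a rotation of theta shifts points by roughly
    focal_px * tan(theta) pixels.
    """
    theta = math.radians(yaw_rate_dps) * (readout_ms / 1000.0)  # rotation during readout (rad)
    return focal_px * math.tan(theta)


if __name__ == "__main__":
    # Hypothetical numbers: 90 deg/s turn, 20 ms readout, 600 px focal length.
    print(f"rolling shutter: {rolling_shutter_skew_px(90, 20, 600):.1f} px")  # 18.9 px
    # Model a global shutter as zero exposure offset between rows.
    print(f"global shutter:  {rolling_shutter_skew_px(90, 0, 600):.1f} px")   # 0.0 px
```

Under these assumptions a vertical edge ends up slanted by roughly 19 px across the frame, while a global shutter keeps it straight because every row is exposed at the same instant.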

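For a VSLAM algorithm, this distortion can be fatal. VSLAM assumes each frame is captured from a single camera pose, so skewed features no longer line up with the map, and the robot can lose its localization, drift off-path, or collide with obstacles.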
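The single-pose assumption can be written out with the standard rolling-shutter projection model. The notation below is introduced here for illustration and is not taken from the source: $\pi$ is the pinhole projection, $\mathbf{T}(t)$ the camera pose at time $t$, $\mathbf{X}_i$ a map landmark, $r_i$ the image row on which it lands, and $t_{\text{line}}$ the per-row readout offset.

```latex
\begin{aligned}
\text{Global shutter:}\quad  & \mathbf{u}_i = \pi\big(\mathbf{T}(t_0)\,\mathbf{X}_i\big) \\
\text{Rolling shutter:}\quad & \mathbf{u}_i = \pi\big(\mathbf{T}(t_0 + r_i\,t_{\text{line}})\,\mathbf{X}_i\big)
\end{aligned}
```

A solver that fits the first model to images produced by the second has to absorb the pose change over $r_i\,t_{\text{line}}$ as reprojection error, and that error grows with the row index and with the robot's speed.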

The 2026 Standard: Global Shutter + Wide Angle

The solution emerging from the CES engineering backrooms is not more complex processing but better raw data. The new "Gold Standard" for mass-production robotics combines three specific features: a Global Shutter sensor, a wide-angle lens, and a USB 3.0 interface.
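The wide-angle and USB 3.0 parts of this recipe can be sanity-checked with quick arithmetic. The sketch below assumes a hypothetical module (1280x800 monochrome, 8-bit, 120 fps, 3 µm pixels, 1.5 mm lens); none of these figures come from the source. It compares the uncompressed stream against nominal USB signalling rates and estimates field of view with the ideal pinhole formula.

```python
import math

# Nominal signalling rates; usable payload throughput is noticeably lower.
USB2_HIGH_SPEED_BPS = 480e6  # USB 2.0 High-Speed
USB3_GEN1_BPS = 5e9          # USB 3.0 / USB 3.2 Gen 1 SuperSpeed


def raw_stream_bps(width: int, height: int, fps: float, bits_per_pixel: int = 8) -> float:
    """Uncompressed video bandwidth in bits/s, ignoring protocol overhead."""
    return width * height * fps * bits_per_pixel


def horizontal_fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal field of view of an ideal pinhole (rectilinear) lens.

    FOV = 2 * atan(w / (2 f)). Fisheye lenses follow other projection models,
    so treat this as an approximation for very wide lenses.
    """
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


if __name__ == "__main__":
    # Hypothetical module: 1280x800 mono, 8-bit, 120 fps.
    bps = raw_stream_bps(1280, 800, 120)
    print(f"raw stream: {bps / 1e6:.2f} Mbit/s")                     # raw stream: 983.04 Mbit/s
    print("under USB 2.0 HS signalling:", bps < USB2_HIGH_SPEED_BPS)  # False
    print("under USB 3.0 signalling:", bps < USB3_GEN1_BPS)           # True

    # 1280 pixels at 3 um pitch gives a 3.84 mm wide sensor; 1.5 mm lens.
    sensor_width_mm = 1280 * 0.003
    print(f"horizontal FOV: {horizontal_fov_deg(sensor_width_mm, 1.5):.1f} deg")
```

With these assumed numbers, an uncompressed 120 fps stream already exceeds USB 2.0's 480 Mbit/s signalling rate, while USB 3.0 leaves ample headroom, and a short lens on a small sensor gives a field of view of roughly 100 degrees.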

The Future is "Simple & Sharp"

Through 2026 and into 2027, the winners in the robotics space won't be the ones with the most expensive sensor suite; they will be the ones who can deploy "Tesla-grade" vision performance at a fraction of the cost.

The formula is clear: Drop the LiDAR, keep the USB, and switch to Global Shutter.