Summary: This 2026 engineering report analyzes the top 3 causes of AI signage deployment failure: fragmented Android HAL permissions, non-compliant USB drivers, and invisible physical obstructions. It argues that successful scaling requires a shift from consumer webcams to driverless UVC infrastructure to ensure visual observability and reduce truck rolls.

AI digital signage often fails not because of weak algorithms, but because deployment environments are fragmented, opaque, and operationally fragile.

As networks scale across mixed operating systems, legacy hardware, and security-controlled environments, issues such as driver conflicts, invisible screen failures, and unclear responsibility boundaries become the primary causes of system breakdown.

This article analyzes the real operational pain points exposed at ISE 2026 and outlines practical architectural principles for building AI signage systems that remain stable, observable, and maintainable at scale.

ISE 2026 showcased an impressive range of AI-powered digital signage solutions. From generative visuals and audience analytics to real-time content orchestration, the industry appeared confident that AI had finally matured.

Yet behind the polished demos and keynote messaging, a very different conversation was taking place—one centered on deployment anxiety, operational fatigue, and systems that break once they leave the exhibition floor.

This article is not a recap of products or announcements. Instead, it examines the structural failure points repeatedly exposed at ISE 2026—issues that directly influence player selection, system architecture, and long-term scalability. These are not problems marketing can hide, and they are increasingly shaping how digital signage systems are designed in 2026 and beyond.

One of the most repeated off-stage conversations at ISE 2026 was not about AI accuracy or display technology, but about platform fragmentation.

In real-world networks, it is now common to find Windows players, multiple generations of Android boxes, smart commercial displays with different SoCs, and dedicated signage players running side by side. Each device comes with its own browser engine, decoding limits, resolution caps, and update cadence. As long as content remains simple, the system appears stable. Once content becomes dynamic, data-driven, or AI-enhanced, the cracks begin to show.

Industry observers such as invidis accurately described this situation as “patchwork systems.” What looks like a unified network is, in reality, a collection of heterogeneous execution environments. Different operating systems, SoC generations, and Chromium versions coexist without a shared baseline.

This creates a paradox integrators know well:

The more devices a CMS claims to support, the harder the system becomes to scale.

Compatibility without standardization does not reduce risk—it multiplies it. Without a unified stack, operational complexity grows faster than network size. At scale, fragmentation becomes a structural barrier, not a technical inconvenience.
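One quick way to see this multiplication is to count distinct execution environments rather than device models. The sketch below is illustrative only. The inventory fields (`os`, `soc`, `chromium`) and the sample values are hypothetical, and a real fleet export would carry more dimensions (decoder limits, firmware build, update channel), each of which multiplies the matrix further.

```python
from collections import Counter
from typing import Iterable, Mapping


def environment_matrix(fleet: Iterable[Mapping[str, str]]) -> Counter:
    """Count players per (OS, SoC, Chromium version) combination.

    Each distinct key is a separate execution environment that dynamic
    content must be validated against. Field names are illustrative.
    """
    return Counter((p["os"], p["soc"], p["chromium"]) for p in fleet)


if __name__ == "__main__":
    # Hypothetical inventory: four hardware platforms, but five environments,
    # because two RK3566 units run different WebView/Chromium builds.
    fleet = [
        {"os": "Windows 11", "soc": "x86", "chromium": "120"},
        {"os": "Android 9", "soc": "RK3288", "chromium": "69"},
        {"os": "Android 11", "soc": "RK3566", "chromium": "87"},
        {"os": "Android 11", "soc": "RK3566", "chromium": "95"},
        {"os": "Android 11", "soc": "Amlogic", "chromium": "95"},
        {"os": "Android 11", "soc": "RK3566", "chromium": "87"},
    ]
    matrix = environment_matrix(fleet)
    print(f"{len(fleet)} players, {len(matrix)} distinct environments")
    for env, count in matrix.most_common():
        print(count, env)
```

The number that matters for testing effort is `len(matrix)`, not the length of the CMS compatibility list.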

The USB Permission Trap (The "Touch" Paradox)

Stock Android is designed for phones: before an app can use a newly connected USB camera, the system asks the user to tap "OK" in a permission dialog. In a headless digital signage player or a kiosk behind glass, there is no one to tap the screen. Some custom ROMs suppress this prompt, but stock firmware often loses the grant on reboot, leaving the camera feed dead until a technician arrives with a mouse.

The Power Budget Limit (The 500mA Lie)

USB 2.0 allows a port to supply up to 500 mA, but many cost-effective Android boxes (e.g., RK3566 or Amlogic designs) share one power rail across several USB ports, so the real per-port budget can be lower. A consumer 1080p webcam with autofocus motors and heavy ISP processing can easily spike above 500 mA. The result: the voltage drops, the camera disconnects at random, and the OS kills the app. This is often misdiagnosed as "software instability" when it is actually a brownout.
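The check below is a minimal sketch of a pre-deployment power-budget review, assuming you know (or have measured) each peripheral's peak current and the shared rail's limit. The 500 mA constant is the USB 2.0 per-port figure; the rail limit and example currents are hypothetical and vary by board.

```python
from dataclasses import dataclass

# USB 2.0: a high-power device may draw up to 5 unit loads of 100 mA = 500 mA.
USB2_PORT_LIMIT_MA = 500


@dataclass
class Peripheral:
    name: str
    peak_ma: int  # worst-case draw, e.g. autofocus motor moving under ISP load


def check_shared_rail(rail_limit_ma: int, peripherals: list[Peripheral]) -> list[str]:
    """Return warnings when peak draw exceeds the per-port or shared-rail budget."""
    warnings = []
    for p in peripherals:
        if p.peak_ma > USB2_PORT_LIMIT_MA:
            warnings.append(f"{p.name}: {p.peak_ma} mA exceeds the USB 2.0 port limit")
    total = sum(p.peak_ma for p in peripherals)
    if total > rail_limit_ma:
        warnings.append(f"shared rail: {total} mA peak exceeds {rail_limit_ma} mA budget")
    return warnings
```

For example, `check_shared_rail(700, [Peripheral("webcam", 550), Peripheral("4G dongle", 200)])` returns two warnings: the webcam alone exceeds the port limit, and together the two devices exceed the hypothetical 700 mA rail.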

Fig 1. The fragmentation of Android HAL versions across different SoC generations often leads to inconsistent USB peripheral behavior

Another failure repeatedly highlighted at ISE 2026 is responsibility fragmentation.

When a screen goes black or content plays incorrectly, troubleshooting often follows a predictable pattern. The CMS vendor points to hardware. The hardware vendor blames the operating system or network. The operator receives complaints, while the system integrator has no immediate way to determine where the fault actually lies.

Several vendors acknowledged this openly at ISE. Some CMS platforms now promote fully integrated stacks—hardware, OS, software, and remote device management—not because modularity failed, but because customers no longer tolerate finger-pointing.

For operators, visual quality or feature richness has slipped behind a more basic requirement:

When something breaks, can someone take responsibility—and can the problem be identified quickly?

As networks scale across regions and countries, delayed fault isolation becomes prohibitively expensive. Systems that cannot clearly define accountability at the operational layer struggle to remain viable, regardless of their technical sophistication.
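One way to shorten fault isolation is to probe the stack bottom-up and report the first layer that fails, together with who owns it. The layer names, ownership mapping, and probe functions below are hypothetical placeholders. The design choice worth noting is that a layer with no probe is reported as unknown rather than silently assumed healthy.

```python
from typing import Callable, Optional

# Ordered bottom-up; layer names and owners are illustrative placeholders.
LAYERS: list[tuple[str, str]] = [
    ("network", "network provider / integrator"),
    ("player_os", "hardware / OS vendor"),
    ("cms_playback", "CMS vendor"),
    ("screen_output", "display / on-site installation"),
]


def isolate_fault(probes: dict[str, Callable[[], bool]]) -> Optional[tuple[str, str]]:
    """Return (layer, owner) for the first failing layer, or None if all pass.

    Probing lower layers first avoids blaming the CMS for a dead network link.
    A layer without a probe is reported as unknown instead of assumed healthy.
    """
    for layer, owner in LAYERS:
        probe = probes.get(layer)
        if probe is None:
            return layer, "unknown: no probe for this layer"
        if not probe():
            return layer, owner
    return None
```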

The CMS log says 'Camera Connected', but the Android OS has put the USB port into suspend mode to save power. The software is right and the hardware is right, but the system is broken.
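On Linux-based players, the kernel exposes each USB device's runtime power state in sysfs, so "connected" and "awake" can be checked separately. Below is a minimal sketch. It assumes read access to `/sys/bus/usb/devices/<dev>/power/`, which stock Android usually blocks for ordinary apps, so it may need a privileged agent or a custom build. The device name is an assumption for your hardware.

```python
from pathlib import Path


def usb_power_state(device_dir: Path) -> dict[str, str]:
    """Read runtime power-management attributes for one USB device.

    device_dir is e.g. /sys/bus/usb/devices/1-1 (bus path depends on hardware).
    'control' is 'auto' when autosuspend is allowed, 'on' when kept awake.
    'runtime_status' reports values such as 'active' or 'suspended'.
    """
    power = device_dir / "power"
    state = {}
    for attr in ("control", "runtime_status"):
        f = power / attr
        state[attr] = f.read_text().strip() if f.exists() else "unavailable"
    return state


def camera_awake(device_dir: Path) -> bool:
    """'Connected' is not enough: flag cameras the kernel has suspended."""
    return usb_power_state(device_dir).get("runtime_status") == "active"
```

Writing `on` to the same `power/control` file (root required) disables runtime autosuspend for that device, which is the usual mitigation when a camera must stay awake.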

A retail chain deployed 500 kiosks with consumer webcams. An automatic Windows update changed the USB power management driver, and the webcams went into 'sleep mode' and never woke up. The fix required a technician to visit every store to unplug and replug each USB camera. Total loss: $150,000 (about $300 per kiosk).
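A frame-freshness watchdog would not have prevented the update, but it could have detected the stall within minutes and tried remote recovery before anyone booked a technician. The sketch below assumes hypothetical recovery hooks (restart capture, reset the USB port) that return `True` once frames resume. Whether a port reset is possible remotely depends on the platform and its permissions.

```python
import time
from typing import Callable


class FrameWatchdog:
    """Escalating recovery for a camera whose frames stop arriving.

    Hypothetical ladder: each action is tried in order; only if all fail is
    a site visit requested. Action names and thresholds are assumptions.
    """

    def __init__(self, stale_after_s: float,
                 actions: list[tuple[str, Callable[[], bool]]],
                 clock: Callable[[], float] = time.monotonic):
        self.stale_after_s = stale_after_s
        self.actions = actions
        self.clock = clock
        self.last_frame = clock()

    def on_frame(self) -> None:
        """Call from the capture loop whenever a new frame arrives."""
        self.last_frame = self.clock()

    def check(self) -> str:
        """Return 'ok', 'recovered:<action>', or 'truck_roll'."""
        if self.clock() - self.last_frame < self.stale_after_s:
            return "ok"
        for name, action in self.actions:
            if action():  # action reports True if frames resumed
                self.last_frame = self.clock()
                return f"recovered:{name}"
        return "truck_roll"
```

The injectable `clock` keeps the watchdog testable; in production it defaults to `time.monotonic`, which is unaffected by wall-clock changes.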

Content innovation is accelerating faster than hardware ecosystems can adapt.

At ISE 2026, AI-generated visuals, real-time data applications, and complex web-based experiences were everywhere. These workloads assume modern browsers, capable GPUs, and updated decoding pipelines. Yet many deployed networks still rely on older SoCs, outdated Chromium versions, and players designed for static content.

The result is not gradual degradation, but unpredictable failure. Some screens stutter, others drop frames, and some fail completely. This inconsistency is more damaging than uniform limitations, because it undermines trust in the system.

The emergence of cloud-rendered and streamed content architectures reflects a growing industry acknowledgment: local execution environments can no longer be assumed to be reliable. When content complexity exceeds local capability, operators must either accelerate hardware refresh cycles or accept partial network failure.

Content innovation now routinely outpaces hardware reality. Platforms that cannot explain how older devices degrade gracefully—or how they are protected from new content demands—are increasingly difficult to justify as long-term infrastructure choices.
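One way to make degradation explicit is to resolve every player to the richest content tier it can demonstrably run, with a known-safe fallback. The tier names, Chromium thresholds, and decode limits below are hypothetical. The cloud-rendered tier reflects the streaming approach described above, where the player only needs to decode video.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlayerProfile:
    chromium_major: int
    has_gpu_compositing: bool
    max_decode_height: int  # e.g. 720, 1080 or 2160


# Hypothetical tiers, richest first:
# (name, min Chromium major, needs GPU compositing, min decode height)
TIERS = [
    ("interactive_webgl", 110, True, 2160),
    ("html5_video_1080p", 87, False, 1080),
    ("cloud_rendered_stream", 69, False, 720),
]
FALLBACK = "static_image_loop"


def select_tier(p: PlayerProfile) -> str:
    """Pick the first (richest) tier whose requirements the player meets."""
    for name, min_chromium, needs_gpu, min_decode in TIERS:
        if (p.chromium_major >= min_chromium
                and (p.has_gpu_compositing or not needs_gpu)
                and p.max_decode_height >= min_decode):
            return name
    return FALLBACK
```

Because the fallback is explicit, an old player receives a predictable static loop instead of content that stutters or fails.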

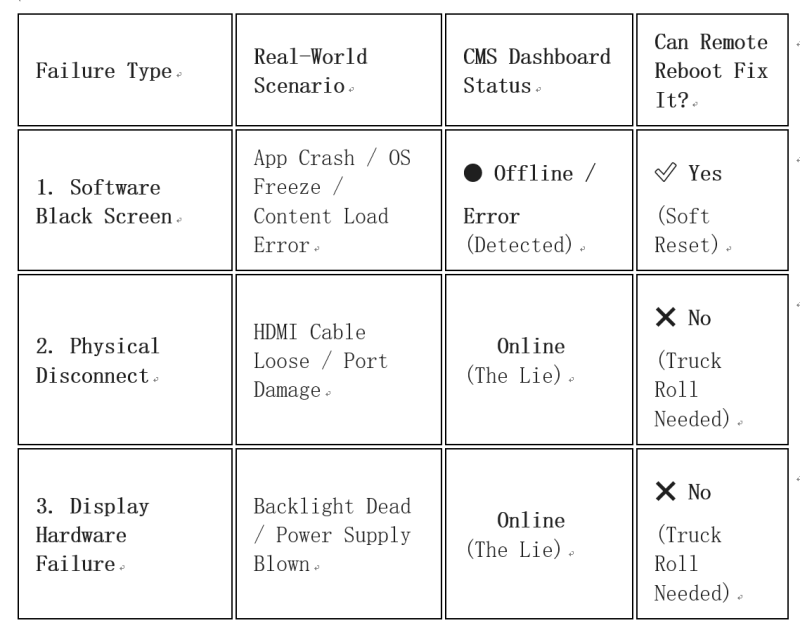

Many monitoring systems can report whether a device is online, whether a process is running, or whether CPU usage appears normal. What they cannot answer is far more critical:

What is actually happening on the screen?

At ISE 2026, integrators repeatedly admitted that some of their most expensive failures had nothing to do with software crashes. Loose HDMI cables, powered-off displays, blocked screens, or misconfigured brightness often appear invisible at the system level. From the dashboard, everything looks healthy. In reality, the screen is unreadable—or dark.

The only way to diagnose these issues is often a site visit. In large European networks, each truck roll carries substantial cost and operational disruption.

This reveals a fundamental gap in many architectures: a lack of visual observability. Logs and metrics describe system health, but they do not capture user reality. Without a reliable way to see what the audience actually sees, operators remain blind at the most critical layer.

At scale, the absence of a “remote eye” is not a feature gap—it is an operational failure mode.
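As a rough illustration of a "remote eye," the sketch classifies a grayscale capture of the screen area by brightness and contrast. It assumes a camera or capture path that can see the screen. The thresholds are placeholders to be tuned per site, and real deployments would also compare the capture against the content that is supposed to be playing.

```python
from statistics import fmean, pstdev
from typing import Sequence

# Illustrative thresholds on 8-bit luminance; tune per site and camera.
DARK_MEAN = 20.0
FLAT_STDDEV = 6.0


def classify_screen(luma: Sequence[int]) -> str:
    """Classify a grayscale capture of the screen area (values 0-255).

    'dark'    : almost no light, e.g. display off, no signal, backlight fault
    'flat'    : lit but nearly uniform, e.g. blocked view or a solid frame
    'content' : enough variation to suggest real content is visible
    """
    if not luma:
        raise ValueError("empty frame")
    if fmean(luma) < DARK_MEAN:
        return "dark"
    if pstdev(luma) < FLAT_STDDEV:
        return "flat"
    return "content"
```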

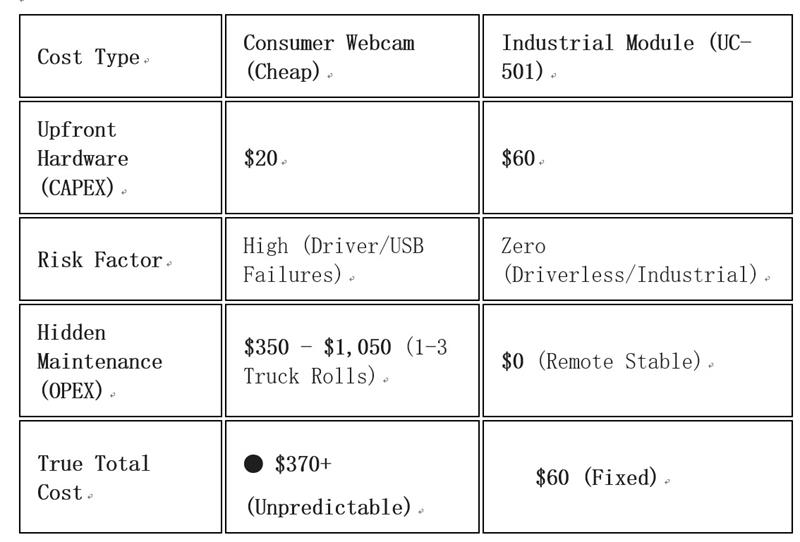

The Cost of Blindness Equation: 1 Truck Roll ($350) > 5 Years of Hardware Cost Difference. Buying a $20 consumer webcam instead of a $60 industrial module saves $40 per unit in CAPEX, a one-time saving that a single $350 truck roll wipes out almost nine times over, and it risks $1,000+ in OPEX over 3 years (the cost of about three truck rolls).

The Verdict: Saving $40 on hardware today risks losing $1,000+ in maintenance tomorrow.
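The arithmetic behind the equation, as a small sketch. The prices and truck-roll cost come from the text above; `extra_rolls` is an assumption (three additional visits over three years is roughly where the $1,000+ figure lands).

```python
def blindness_cost(consumer_price: float = 20.0,
                   industrial_price: float = 60.0,
                   truck_roll: float = 350.0,
                   extra_rolls: int = 3) -> dict[str, float]:
    """Compare CAPEX saved on a cheaper camera with the OPEX it can trigger.

    extra_rolls is an assumed count of additional site visits per unit
    caused by the cheaper camera over the comparison period.
    """
    capex_saving = industrial_price - consumer_price
    opex_risk = extra_rolls * truck_roll
    return {
        "capex_saving": capex_saving,
        "opex_risk": opex_risk,
        # Fraction of one truck roll per unit that cancels the saving.
        "break_even_rolls": capex_saving / truck_roll,
    }
```

With the defaults, `break_even_rolls` is about 0.11: the $20 camera only pays off if it causes fewer than one extra truck roll for every nine or so units deployed.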

The 'Dark Screen' Taxonomy

Fig 2. The four types of 'Dark Screen' failures. Only Type 1 is visible to standard CMS logs; Types 2-4 require visual verification

Security and compliance are no longer secondary concerns in digital signage deployments.

Enterprise and public-sector buyers increasingly demand managed operating systems, centralized patching, and alignment with Zero Trust frameworks. Devices that require root access, custom kernels, or developer modes are becoming liabilities.

This shift directly impacts peripheral design. Custom drivers, kernel patches, and proprietary interfaces create an invisible software supply chain. Each driver introduces new attack surfaces, maintenance obligations, and upgrade risks.

In managed environments—whether ChromeOS, locked-down Windows, or enterprise Android—such dependencies are increasingly unacceptable. A single incompatible driver can block OS updates, trigger security reviews, or break functionality overnight.

As a result, the industry is gravitating toward standardized, driverless interfaces. Protocols such as UVC are no longer chosen for convenience, but for risk containment. In 2026, avoiding custom drivers is not a technical preference—it is a governance requirement.
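In practice, "driverless" means the camera declares the standard USB Video class (class code 0x0E), so the OS binds its built-in class driver (`uvcvideo` on Linux, `usbvideo.sys` on Windows) instead of a vendor module. Below is a minimal Linux sysfs check. It assumes the flat `/sys/bus/usb/devices` layout, where interface entries are named `<dev>:<config>.<interface>`; the device names are examples.

```python
from pathlib import Path

USB_CLASS_VIDEO = 0x0E  # USB-IF class code for Video (UVC) interfaces


def is_uvc_device(devices_root: Path, dev_name: str) -> bool:
    """True if any interface of dev_name declares the USB Video class.

    devices_root is normally /sys/bus/usb/devices; each interface entry
    (e.g. '1-1:1.0') holds a hex 'bInterfaceClass' file such as '0e'.
    """
    for intf in devices_root.glob(f"{dev_name}:*"):
        cls_file = intf / "bInterfaceClass"
        if cls_file.exists() and int(cls_file.read_text().strip(), 16) == USB_CLASS_VIDEO:
            return True
    return False
```

Usage on a live system: `is_uvc_device(Path("/sys/bus/usb/devices"), "1-1")`. A vendor-specific camera typically reports class `ff` instead and needs its own driver.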

Taken together, these failures point to a clear conclusion:

AI signage does not fail because models are weak—it fails because systems are not designed for deployment.

Scalable deployments increasingly favor a small number of reference architectures:

Standardized operating systems

Predictable hardware configurations

Driverless peripherals

Clear observability at the screen level

Long lifecycle components that minimize forced refresh cycles
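Reference architectures only pay off if drift from them is detected. The sketch below compares each device against a baseline and lists its deviations. The field names, image tags, and driver name are hypothetical, and it covers only some of the principles above (OS, hardware, drivers, screen-level observability).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Baseline:
    os_image: str
    hardware_sku: str
    allow_custom_drivers: bool = False


@dataclass
class Device:
    device_id: str
    os_image: str
    hardware_sku: str
    custom_drivers: list[str]
    has_screen_check: bool


def drift_report(baseline: Baseline, fleet: list[Device]) -> dict[str, list[str]]:
    """Map device_id to its deviations; conforming devices are omitted."""
    report = {}
    for d in fleet:
        issues = []
        if d.os_image != baseline.os_image:
            issues.append(f"os {d.os_image} != {baseline.os_image}")
        if d.hardware_sku != baseline.hardware_sku:
            issues.append(f"sku {d.hardware_sku} != {baseline.hardware_sku}")
        if d.custom_drivers and not baseline.allow_custom_drivers:
            issues.append("custom drivers: " + ", ".join(d.custom_drivers))
        if not d.has_screen_check:
            issues.append("no screen-level observability")
        if issues:
            report[d.device_id] = issues
    return report
```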

This explains the industry’s gradual shift away from DIY approaches toward replicable stacks and managed service models. At scale, operational predictability matters more than theoretical flexibility.

The 2026 Hardware Checklist

[ ] Driverless: Does it work on Linux kernel 5.x without compiling modules?

[ ] Form Factor: Is the PCB < 20mm to fit inside ultra-slim bezels?

[ ] Fixed ISP: Can you lock Auto-White Balance (AWB) to prevent color shifting in changing light?

[ ] UVC Compliance: Does it survive a 100-cycle reboot test on Android without permission loss?
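The 100-cycle reboot test lends itself to automation. Below is a minimal harness that assumes two hypothetical hooks supplied by your test rig: `reboot` (for example, a remote power switch) and `camera_ok` (for example, a probe that confirms frames arrive without a pending permission prompt).

```python
from typing import Callable


def reboot_soak(cycles: int,
                reboot: Callable[[], None],
                camera_ok: Callable[[], bool]) -> list[int]:
    """Reboot the player `cycles` times; return the cycle numbers after which
    the camera was not usable (permission lost, not enumerated, no frames).
    """
    failures = []
    for i in range(1, cycles + 1):
        reboot()
        if not camera_ok():
            failures.append(i)
    return failures
```

Under the checklist, a pass would be an empty list after `reboot_soak(100, ...)`. Recording which cycles failed, rather than just a count, helps separate intermittent brownouts from systematic permission loss.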

ISE 2026 did not reveal a lack of innovation. It revealed a lack of operational discipline.

The future of AI digital signage depends less on adding features and more on making systems that survive real-world constraints—heterogeneous environments, security governance, aging hardware, and human maintenance limits.

In that future, the most valuable components will not be the most exciting ones. They will be the boring, standardized, and reliable building blocks that allow AI to function outside of demos.

At Shenzhen Novel, our work on industrial USB cameras grew out of these exact deployment challenges—not from chasing trends, but from solving the problems that appear after systems go live.

Professional FAQ: AI Digital Signage Deployment

AI digital signage systems typically fail at scale due to operational fragility rather than algorithmic limitations. Fragmented operating systems, inconsistent hardware generations, driver dependencies, and limited remote visibility create failure points that only appear after deployment. As networks grow, these issues compound, leading to black screens, unpredictable behavior, and high maintenance costs.

ChromeOS is not inherently more powerful, but it offers stronger standardization, centralized management, and stricter security controls. These characteristics reduce configuration drift and operational risk in large deployments. However, ChromeOS also enforces strict hardware compatibility rules, making driverless peripherals and standard interfaces essential for long-term stability.

The biggest operational risk is the lack of real-world observability. Many systems can monitor processes and device status but cannot confirm what is actually displayed on the screen. Without visual verification, issues such as black screens, blocked displays, or incorrect brightness often go undetected until customer complaints trigger costly on-site service visits.

Custom drivers introduce an additional software supply chain that must be secured, patched, tested, and maintained. In managed environments such as ChromeOS or enterprise Windows, driver conflicts can block updates, create security vulnerabilities, and increase downtime. Over time, these risks outweigh the short-term benefits of proprietary hardware integration.

System integrators reduce truck rolls by designing for operational transparency. This includes standardized hardware stacks, driverless peripherals, and tools that allow remote verification of screen output rather than relying solely on logs or device heartbeats. Integrators working with industrial hardware suppliers such as goobuy often focus on simplifying diagnostics to minimize on-site intervention.

Visual observability refers to the ability to remotely confirm what the audience actually sees on a screen. Unlike traditional monitoring metrics, it provides contextual evidence of display health, content visibility, and physical obstructions. Visual observability is increasingly viewed as a core requirement for meeting SLA commitments in distributed signage networks.

Systems designed for long lifecycles prioritize standard interfaces, predictable operating environments, and hardware that does not rely on frequent driver updates. By avoiding tightly coupled software dependencies, organizations can extend hardware usability while adapting content and analytics through software updates rather than forced device replacement.

Future-proof camera architectures rely on standardized, driverless protocols that remain compatible across operating systems and security models. Industrial USB cameras designed for long-term availability and stable interfaces are increasingly favored. Companies like goobuy focus on this approach to ensure cameras remain deployable as OS policies and compliance requirements evolve.

As AI-driven search engines and copilots increasingly guide hardware and architecture decisions, systems that are clearly documented, standardized, and deployment-proven gain disproportionate visibility.

If you are evaluating how cameras, peripherals, or sensing components fit into large-scale AI digital signage deployments—and want solutions that align with modern OS constraints, security requirements, and operational realities—reviewing practical deployment-focused resources is often the best starting point.

Learn more about pDOOH deployment-ready USB camera design at: https://www.okgoobuy.com/micro-usb-cam.html

About Shenzhen Novel Electronics Limited

We are the "Eyes" for your Edge AI "Brains." Based in China and specializing in Micro USB Camera Modules and Embedded Vision since 2011, we help System Integrators navigate the 2026-2027 hardware transition.

View goobuy camera products here: https://www.okgoobuy.com/products.html

View original raw testing videos of goobuy cameras on our YouTube channel:

https://www.youtube.com/@okgoobuy/featured

Follow Goobuy on LinkedIn for industry analysis and professional articles: https://www.linkedin.com/in/novelvisiontech/

Related technical articles

1. 2026 pDOOH Retrofit Legacy Screens: pDOOH Ready Camera

2. pDOOH Retrofit in 2026: From Screens to Proof (1)

3. pDOOH Retrofit in 2026: From Screens to Proof (2)

4. Goobuy: Professional Micro USB Camera for AI Edge Vision