Edge AI Vision in 2026 refers to the deployment of computer vision systems directly on edge devices—such as AI signage players, retail AI terminals, mobile robots, and industrial AI boxes—where image capture, inference, and decision-making occur locally rather than in the cloud.

This shift is driven by latency constraints, data privacy regulations, bandwidth cost reduction, and the need for deterministic real-time performance in commercial and industrial environments. Vision hardware selection—especially USB camera modules, sensor sensitivity, field of view, and low-light performance—has become a system-level design decision rather than a peripheral choice.

This article analyzes how vision requirements diverge across digital signage, AI retail, robotics, and industrial edge AI boxes, and explains why camera architecture, optical constraints, and deployment environments define the success or failure of edge AI projects in 2026.

----------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Why upgrade? Because 'blind' kiosks are bleeding profit. Industry data shows self-checkout shrink is 4x higher than at staffed lanes. The classic 'Banana Trick' (item switching) alone accounts for nearly 10% of total store shrink. A simple USB camera retrofit can help plug this $50 billion leak.

Table 5 – Self‑checkout theft detection & intervention effectiveness

| Metric | Value | Context | Source | Year |
|---|---|---|---|---|
| Reduction in theft incidents with AI‑based detection | Up to 35% | Estimated drop in SCO theft incidents when AI‑based detection is implemented | WifiTalents – Self Checkout Theft Statistics | 2025 |
| Share of self‑checkout shoplifters currently caught | About 25% | Approximate detection rate for SCO shoplifters, even with current controls | WifiTalents | 2025 |
| Share of retailers citing shrink as key SCO concern | 60% | Portion of retailers saying shrink is their top concern with self‑checkout | Datos Insights press release – “Retailers Invest in Self‑checkout Solutions Despite Shrinkage Concerns” | 2025 |
| Growth in US self‑checkout systems market | Double‑digit CAGR (exact value not specified) | Qualitative growth description; no specific rate is given | Future Market Insights – US Self‑checkout Systems Market | 2025 |

Across all these edge vision projects, the real scaling wall is operational: we are moving from a handful of pilots to fleets of thousands of endpoints, and studies of industrial edge deployments show that without robust model management, monitoring, and update tooling, AI models drift and performance quietly degrades instead of improving over time.
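One low-cost form of that monitoring is to track a rolling statistic of each device's own model outputs and flag it when it departs from the value recorded at commissioning. The sketch below assumes mean top-1 confidence as that statistic, with a fixed tolerance; the class name `ConfidenceDriftMonitor` and all thresholds are hypothetical, and confidence is only a proxy that does not replace periodic evaluation on labelled field data.

```python
from collections import deque


class ConfidenceDriftMonitor:
    """Hypothetical helper: flags drift when rolling mean confidence drops.

    baseline, window and tolerance are assumptions tuned per deployment.
    """

    def __init__(self, baseline: float, window: int = 500, tolerance: float = 0.10):
        self.baseline = baseline          # mean confidence measured at commissioning
        self.tolerance = tolerance        # allowed absolute drop before alerting
        self.scores = deque(maxlen=window)

    def update(self, confidence: float) -> bool:
        """Record one inference result; return True if drift is detected."""
        self.scores.append(confidence)
        if len(self.scores) < self.scores.maxlen:
            return False                  # not enough evidence yet
        rolling_mean = sum(self.scores) / len(self.scores)
        return rolling_mean < self.baseline - self.tolerance


monitor = ConfidenceDriftMonitor(baseline=0.90, window=5, tolerance=0.10)
healthy = [monitor.update(c) for c in [0.91, 0.89, 0.92, 0.90, 0.88]]
degraded = [monitor.update(c) for c in [0.60, 0.62, 0.58, 0.61, 0.59]]
print(healthy[-1], degraded[-1])
```

In a fleet, the boolean would typically be reported as a health metric to the central model-management service rather than acted on locally.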

In AI retail deployments across North America and Western Europe, vision systems are expected to operate continuously under mixed lighting conditions, where reflections, glass surfaces, and human motion create unstable visual features for inference models.

2. Robotics

Pain 3: The "Vibration Gap" in Robotics

Sector: AMRs & Warehouse Automation

The Data: While algorithms get smarter, hardware reliability remains the bottleneck. Field reports from 2024-2025 highlight "USB Disconnects" as a top failure mode for Autonomous Mobile Robots (AMRs).

The Trend: Warehouse environments subject robots to constant shock and vibration. Consumer-grade connectors (standard USB) suffer from cable fatigue, causing robots to go "blind" mid-operation and require manual intervention. The 2026 Reality: Reliability > Algorithm. Designs are shifting away from "webcams on robots" toward strictly industrial-grade connectivity standards designed for shock resistance.

It’s not just about the sensor; it’s about the connection. Robotics engineers tell us their #1 hardware nightmare is 'USB disconnects' caused by vibration. Unlike consumer webcams, industrial camera modules are engineered with locking connectors to survive the 'rough ride' of warehouse floors.
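Locking connectors address the physical cause. On the software side, the perception stack can at least detect a dropped camera and try to recover before a human has to intervene. The sketch below is hypothetical: `open_camera` stands in for whatever actually opens the device, its `read()` is assumed to mirror the `(ok, frame)` tuple returned by OpenCV's `VideoCapture.read()`, opening is assumed to raise `OSError` on failure, and the retry and backoff values are illustrative.

```python
import time
from typing import Any, Callable, Optional


class CameraWatchdog:
    """Hypothetical helper: re-opens a camera after repeated read failures."""

    def __init__(self, open_camera: Callable[[], Any], max_failures: int = 3,
                 max_reopen_attempts: int = 5, backoff_s: float = 0.5):
        self.open_camera = open_camera    # returns an object with read() -> (ok, frame)
        self.max_failures = max_failures  # consecutive failed reads before reopening
        self.max_reopen_attempts = max_reopen_attempts
        self.backoff_s = backoff_s
        self.camera = open_camera()
        self.failures = 0
        self.reopens = 0

    def read(self) -> Optional[Any]:
        """Return a frame, or None when no frame is available right now."""
        ok, frame = self.camera.read()
        if ok:
            self.failures = 0
            return frame
        self.failures += 1
        if self.failures >= self.max_failures:
            self._reopen()
        return None

    def _reopen(self) -> None:
        release = getattr(self.camera, "release", None)
        if callable(release):
            release()                     # free the stale device handle first
        for attempt in range(self.max_reopen_attempts):
            try:
                self.camera = self.open_camera()
                self.failures = 0
                self.reopens += 1
                return
            except OSError:               # assumed failure signal from open_camera
                time.sleep(self.backoff_s * 2 ** attempt)  # exponential backoff
        raise RuntimeError("camera still unavailable; escalate to fleet monitoring")


class FlakyCamera:
    """Simulated camera that returns a few frames and then drops out."""

    def __init__(self, good_frames: int):
        self.good_frames = good_frames

    def read(self):
        if self.good_frames > 0:
            self.good_frames -= 1
            return True, "frame"
        return False, None


watchdog = CameraWatchdog(lambda: FlakyCamera(good_frames=2), backoff_s=0.0)
results = [watchdog.read() for _ in range(6)]
print(results)  # ['frame', 'frame', None, None, None, 'frame']
```

A software watchdog only shortens recovery after a disconnect; it does not prevent the disconnect, which is why the connector choice still matters.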

For mobile robots operating in warehouses, campuses, and public infrastructure, camera selection directly affects navigation reliability, obstacle detection, and long-term system uptime, not just image quality.

In service and warehouse robots, vision-only navigation still breaks down in the very environments we care about most (long shiny aisles, low-texture floors, and dynamic obstacles), so we are being pushed toward more complex hybrid LiDAR+vision stacks just to maintain uptime and throughput.

3. Digital Signage Media Player

| Metric | Value | Context | Source | Year |
|---|---|---|---|---|
| Added latency in GPU‑only AI upscaling mode | ~91 ms | Extra delay compared with a hybrid GPU+NPU pipeline for real‑time video processing | AMD – “Real‑time Edge‑optimized AI powered Parallel Pixel‑upscaling” | 2025 |
| Typical per‑stage latency | Tens of ms | Per‑stage delays (capture, transmission, decoding, inference) in real‑time visual intelligence pipelines | RTInsights – “Reducing Latency in Real‑Time Visual Intelligence Systems” | 2025 |

Our camera-based digital signage and retail media networks only create value if the edge players can actually run real-time models, yet tests show naïve GPU-only video AI pipelines adding ~90 ms of latency per frame, forcing us to invest in properly sized CPU/NPU hardware and optimized pipelines to keep experiences responsive.
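Why ~90 ms matters becomes clear from the frame budget: at 30 fps each frame has about 33 ms, so a pipeline whose stages run one after another per frame must fit inside that window or frames back up. The per-stage numbers below are illustrative assumptions, not benchmarks; only the ~91 ms GPU-only overhead comes from the table above. Real pipelines often overlap stages, which improves throughput but not the end-to-end latency modelled here.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame at a given frame rate."""
    return 1000.0 / fps


def pipeline_fits(stages_ms: dict, fps: float) -> tuple:
    """Return (total latency, budget, fits) for a sequential per-frame pipeline."""
    total = sum(stages_ms.values())
    budget = frame_budget_ms(fps)
    return total, budget, total <= budget


# Illustrative per-stage latencies in ms (assumed values, not measurements).
optimized = {"capture": 8, "decode": 5, "inference_npu": 12, "render": 4}
gpu_only = dict(optimized, gpu_upscaling_overhead=91)  # ~91 ms from the AMD figure

for name, stages in [("optimized", optimized), ("gpu_only", gpu_only)]:
    total, budget, fits = pipeline_fits(stages, fps=30)
    print(f"{name}: {total} ms vs {budget:.1f} ms budget -> fits={fits}")
```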

Unlike traditional advertising displays, AI-enabled signage players in 2026 act as perception nodes, requiring stable image capture for audience analytics without introducing privacy risks or cloud latency.

Source Data: Aggregated from Place Exchange H1 2024 Trends Report & JCDecaux Programmatic benchmarks

According to market data from Place Exchange and JCDecaux, programmatic DOOH inventory backed by real-time audience analytics commands a significant premium, often 2x higher CPMs than non-measured screens.

Table 3 – Edge vs cloud video analytics cost (digital signage)

| Metric | Value | Context | Source | Year |

|---|---|---|---|---|

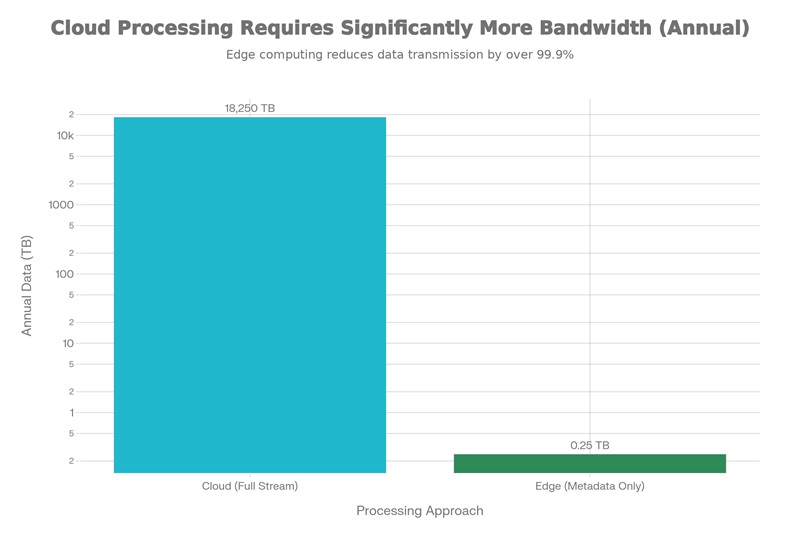

| Annual cost – cloud‑based video analytics | $795,150 per year | For 1,000 digital signage endpoints streaming 1080p 24/7 to cloud | Cost comparison analysis using 2025 AWS pricing library | 2025 |

| Annual cost – edge‑based processing | $250,030 per year | Same 1,000‑endpoint scenario with on‑device analytics and metadata only | Same analysis library | 2025 |

| Annual savings using edge | $545,120 per year | Difference between cloud and edge approaches | Same analysis library | 2025 |

| Cost reduction from edge vs cloud | 68.5% | Percentage reduction in total annual cost | Same analysis library | 2025 |

| Cloud data volume | 18,797 TB per year (≈18.36 PB) | Total video data transmitted/stored for 1,000 endpoints | Same analysis library | 2025 |

| Edge data volume | 0.72 TB per year | Only metadata sent when using edge processing | Same analysis library | 2025 |

| Data reduction factor | 26,214× | Reduction in transmitted data when using edge | Same analysis library | 2025 |
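The data-volume rows of Table 3 can be reproduced from two per-endpoint rates. The source does not state those rates; the values below are our back-calculation, which matches its figures when binary units are used (1 Mbit = 2^20 bits, 1 TB = 2^40 bytes) over a 365-day year: a 5 Mbit/s video stream and 25 bytes/s of metadata per endpoint. Treat both rates as assumptions. The cost rows are taken directly from the table.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600
TIB = 2 ** 40  # the table's "TB" figures match binary tebibytes


def annual_tib(bytes_per_second: float, endpoints: int) -> float:
    """Annual volume in TiB for a fleet sending a constant byte rate."""
    return bytes_per_second * SECONDS_PER_YEAR * endpoints / TIB


ENDPOINTS = 1_000
video_bps = 5 * 2 ** 20 / 8   # assumed 5 Mbit/s (binary) 1080p stream, in bytes/s
metadata_bps = 25             # assumed metadata rate, bytes/s

cloud = annual_tib(video_bps, ENDPOINTS)
edge = annual_tib(metadata_bps, ENDPOINTS)
print(f"cloud {cloud:,.0f} TB/yr (~{cloud / 1024:.2f} PB), edge {edge:.2f} TB/yr, "
      f"reduction {cloud / edge:,.0f}x")

# Cost rows copied from Table 3.
cloud_cost, edge_cost = 795_150, 250_030
savings = cloud_cost - edge_cost
print(f"savings ${savings:,} ({savings / cloud_cost:.1%})")
```

Computed this way, the cost reduction rounds to 68.6%; the table's 68.5% appears to be truncated rather than rounded.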

On the industrial floor, AI gateways and vision PCs are becoming critical infrastructure, but surveys show around 60% of manufacturers now cite cybersecurity and data protection at the edge as a top concern, forcing us to treat every camera-equipped box like an OT asset that must be hardened and centrally managed.

Pain 2: The "Cloud Tax" & Bandwidth Physics

Sector: Industrial IoT & Digital Signage

The Data: Streaming 1080p video from 1,000 endpoints to the cloud for analysis is no longer just expensive; it is structurally inefficient. Our cost model, based on 2025 AWS Kinesis and S3 pricing, estimates annual costs exceeding $790,000 for such a deployment.

The Trend: Beyond cost, there is a "Physics Problem." Industrial reports highlight that high-framerate inspection cameras are saturating factory networks, choking critical Operational Technology (OT) signals. The 2026 Reality: CFOs and CTOs are mandating a "Data Minimization" policy: Process locally, transmit metadata only. The era of "Stream Everything" is over.

4. Industrial AI Box

It's not just cost; it's physics. Uploading raw video from 500 inspection cameras can choke your factory network, blocking critical OT signals. Edge processing isn't just cheaper; it is often the only practical way to keep your bandwidth alive.
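A quick sizing check shows the scale of the problem. The per-camera bitrates and the shared 1 GbE uplink below are assumptions chosen for illustration; real inspection cameras vary widely in resolution, frame rate, and compression.

```python
def raw_mbps(width: int, height: int, fps: int, bits_per_pixel: int = 8) -> float:
    """Uncompressed bitrate in Mbit/s (decimal), e.g. a mono inspection camera."""
    return width * height * fps * bits_per_pixel / 1e6


def aggregate_gbps(cameras: int, mbps_per_camera: float) -> float:
    """Total upstream bandwidth in Gbit/s for identical cameras."""
    return cameras * mbps_per_camera / 1000


CAMERAS = 500
UPLINK_GBPS = 1.0  # assumed shared 1 GbE uplink from the production cell

scenarios = {
    "compressed 1080p (~5 Mbit/s, assumed)": 5.0,
    "raw 1080p mono 8-bit at 60 fps": raw_mbps(1920, 1080, 60),
    "metadata only (~10 kbit/s, assumed)": 0.01,
}
for label, mbps in scenarios.items():
    total = aggregate_gbps(CAMERAS, mbps)
    print(f"{label}: {total:,.3f} Gbit/s = {total / UPLINK_GBPS:.1%} of uplink")
```

Even compressed streams exceed the assumed uplink by a factor of about 2.5, while metadata-only traffic uses a fraction of a percent.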

Table 4 – Edge AI adoption and challenges in manufacturing / industrial edge

| Metric | Value | Context | Source | Year |
|---|---|---|---|---|
| Organizations citing security & privacy as top edge‑AI challenge | 61% | Share of infrastructure leaders naming security/privacy as primary edge‑AI concern | SUSE – “The Future of Edge Computing in Retail” (retail edge survey, cited here as a general edge indicator) | 2025 |
| Number of core components in typical industrial edge AI suite | 4 | Inference server, model manager, monitor, and agent, described as an integrated AI suite | Omdia – “Industrial edge: From complexity to manageability” | 2025 |
| Time horizon in US manufacturing edge‑AI outlook | 12 months | Outlook period for edge‑AI adoption in US manufacturing | DigitalUpdateReport – “Edge AI Adoption in US Manufacturing: 12‑Month Outlook” | 2025 |

Industrial edge AI boxes prioritize deterministic behavior, EMI resistance, and long-term component availability, making vision hardware lifecycle stability as critical as sensor resolution.

7. The Dark Horse: Beyond the Screen

While the industry focuses on retail and robotics, a quiet infrastructure revolution is underway. By 2026, "dumb" metal terminals will be replaced by intelligent, vision-enabled hubs.

We predict two specific hardware segments will see an explosion in demand by Q3 2026, driven by new monetization models.

7.1 The EV Station: From "Socket" to "Ad Platform"

The economics of EV charging are shifting. With the EV charging market projected to exceed $302.9 billion, operators are realizing that selling electricity isn't enough. They need "New Monetization Opportunities." In 2026, charging stations will evolve into "Amenity Hubs" offering coffee, retail, and, most importantly, programmatic advertising.

The Engineering Challenge: Integrating a camera into a slim outdoor pile is difficult. The "enemy" is lighting. An advertising camera is useless if it cannot analyze audience demographics in a pitch-black parking lot at 2 AM.

The 2026 Spec: Hardware engineers are now searching for robust Embedded USB Camera Modules optimized for outdoor environments.

The "Killer" Keyword: Specifically, we are seeing a surge in RFQs for a "Micro USB camera for LCD/LED monitor display" in EV charging station designs.

The Requirement: The critical spec is Low Light Performance (using sensors such as Sony STARVIS) to keep analytics reliable even in very low ambient light.

Public EV chargers are increasingly:

Add a small camera and an AI edge box, and these become:

Physically, they look very similar to AI digital signage media players with a robust enclosure – another natural fit for micro USB cameras.

7.2 Smart Lockers: The Shift to "Macro Vision"

Logistics lockers are moving beyond simple "QR Code scanning." The next generation of smart lockers will use Optical Character Recognition (OCR) to read messy, wrinkled, or hand-written shipping labels automatically to reduce courier error rates.

The Engineering Challenge: Most off-the-shelf webcams are focused for distances of 60 cm and beyond. When placed inside a compact locker terminal, they cannot focus on a label held just 5 cm away, resulting in blurry images that fail OCR.

The 2026 Spec: This creates a massive demand for the Macro Focus USB Camera Module.

The Requirement: Engineers must switch from standard auto-focus to Fixed Macro Focus (5–10 cm) lenses with low-distortion optics. This allows the camera to replace expensive laser scanners, reading detailed text on waybills instantly.
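The focus problem follows from the thin-lens equation, 1/f = 1/u + 1/v, where u is the object distance and v the lens-to-sensor distance. Moving the subject from 60 cm to 5 cm changes the required v, so a lens fixed for one distance cannot serve the other. The 3 mm focal length below is an assumed, typical-order value for a small camera module, not a specification of any product mentioned here.

```python
def image_distance_mm(focal_mm: float, object_mm: float) -> float:
    """Thin-lens equation 1/f = 1/u + 1/v solved for v (lens-to-sensor distance)."""
    if object_mm <= focal_mm:
        raise ValueError("object must be farther away than one focal length")
    return focal_mm * object_mm / (object_mm - focal_mm)


FOCAL_MM = 3.0  # assumed focal length of a small module lens

v_far = image_distance_mm(FOCAL_MM, 600)   # webcam-style focus at 60 cm
v_macro = image_distance_mm(FOCAL_MM, 50)  # label held 5 cm from the lens
shift_um = (v_macro - v_far) * 1000
print(f"v at 60 cm: {v_far:.4f} mm, v at 5 cm: {v_macro:.4f} mm, "
      f"refocus shift: {shift_um:.0f} um")
```

Under these assumptions the sensor would need to sit roughly 0.18 mm farther from the lens, far more than the depth of focus of a small sensor at typical apertures, which is why the lens is set for macro distance rather than relying on depth of field.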

The 2026 Prediction: Based on current design cycles, we forecast a supply shortage for High-Dynamic-Range (HDR) and Macro-Focus modules starting in Q3 2026. The winners will be the Product Managers who lock in their optical specs today.

Certain industrial control panels and HMIs may add simple vision for:

These applications rarely justify a full industrial camera system, but they do justify an embedded micro USB camera feeding models on an industrial AI box.

We do not expect this segment to explode overnight, but as edge AI becomes standard in industrial PCs, adding one small vision channel becomes a natural next step.

8. The Shift From Algorithms to Infrastructure (Deepened for 2026)

By late 2025, a clear inflection point emerged across edge AI deployments: competitive advantage no longer came from marginal gains in model accuracy, but from infrastructure readiness.

Across digital signage, retail terminals, robotics, and industrial AI boxes, the bottlenecks shifted decisively away from algorithms and toward physical and operational constraints.

By the end of 2025, procurement and engineering discussions increasingly centered on:

Physical mounting constraints

Can the vision system fit into ultra-thin screens, compact kiosks, sealed enclosures, or fanless industrial boxes without redesigning the entire chassis?

Signal stability and interface reliability

Can the camera deliver consistent, low-latency data over long operating hours without dropped frames, EMI issues, or driver instability?

Interface compatibility and OS support

Does the vision hardware work out-of-the-box with Linux, Android, Windows IoT, and edge AI frameworks—without custom drivers or vendor lock-in?

Maintenance simplicity at scale

Can thousands of deployed units be serviced, replaced, or upgraded without on-site recalibration, firmware chaos, or downtime?

Long-term component availability

Will the same hardware be available for 3–5 years, or will supply-chain volatility force redesigns mid-project?

These questions are no longer technical details.

They have become board-level risk factors.

Why procurement now looks like infrastructure planning

As edge AI systems move from pilots to nationwide and multi-country rollouts, buyers increasingly evaluate vision components the same way they evaluate:

networking equipment

power supplies

industrial IPCs

displays and controllers

This is why procurement discussions now resemble infrastructure planning rather than R&D evaluation.

The deeper logic behind the shift

The underlying drivers of this transition are structural:

Algorithm convergence

Model performance gains are becoming incremental and widely accessible, reducing differentiation.

Edge AI scale-out

Tens of thousands of devices expose issues that never appear in lab demos.

Regulatory pressure

Privacy, data locality, and compliance increasingly favor on-device processing over cloud-centric pipelines.

Cost sensitivity

Cloud inference, data transmission, and maintenance costs now dominate P&L discussions.

Supply-chain realism

Hardware stability matters more than theoretical performance when projects must ship and stay live for years.

Together, these forces have redefined what “competitive advantage” means in edge AI.

2026 Insight

The next competitive moat in edge AI will not be algorithm novelty.

It will be deployment friction.

In 2026, winning systems will be those that:

install faster

integrate cleaner

fail less

scale more predictably

remain available longer

In other words, edge AI is becoming infrastructure, and infrastructure always rewards reliability over experimentation.

This shift explains why vision hardware—once treated as a peripheral component—is now being reconsidered as a foundational layer in edge AI system design.

9. Looking Ahead: Why 2026 Will Be a Defining Year

Several macro forces converge in 2026:

Together, these factors suggest that edge AI vision is entering its first commercially stable growth phase.

The central question is no longer:

“Can we deploy AI vision?”

But rather:

“Can this system run affordably, legally, and reliably for years?”

Edge AI vision is not prevailing because it is fashionable or experimental. It is prevailing because it aligns advanced computation with economic reality, regulatory pressure, and operational constraints faced by real-world deployments.

By the end of 2025, successful AI vision projects increasingly shared a common trait: they were designed to scale, operate, and remain compliant, rather than to impress in laboratory conditions.

In January 2026, an upcoming industry whitepaper will examine the practical system architectures, deployment models, and engineering trade-offs that are shaping production-grade edge AI vision systems across digital signage, retail terminals, robotics, and industrial edge devices.

For many organizations, the next breakthrough will not come from adding more intelligence, but from making intelligence deployable, maintainable, and sustainable at scale.

As teams prepare for 2026, most are converging on three distinct integration paths for privacy-safe, vision-driven analytics. A comparative, field-oriented analysis of these approaches will be published in January.

Technical FAQ for Engineers and CEOs

Answer:

Organizations are not abandoning the cloud entirely, but they are limiting raw video streaming due to bandwidth costs, storage expenses, latency issues, and regulatory exposure. As deployments scale beyond pilot projects, edge-based processing becomes the only economically sustainable model for real-time vision applications.

Answer:

Edge AI enables digital signage systems to measure audience presence, dwell time, and engagement locally. This allows operators to justify higher CPM rates and offer performance-based advertising without increasing cloud costs or triggering privacy concerns, directly improving monetization efficiency.

________________________________________

FAQ 4: Can audience analytics be deployed without violating privacy regulations?

Answer:

Yes. Privacy-safe edge AI systems analyze visual input locally and discard images immediately, transmitting only anonymized metadata such as counts or time-based signals. This approach avoids biometric identification and significantly reduces GDPR and regulatory risk.

________________________________________

FAQ 5: Why is retrofit considered a larger opportunity than new hardware deployments?

Answer:

The global installed base of legacy players, kiosks, and industrial terminals far exceeds annual new shipments. Retrofitting existing hardware with edge AI capabilities requires less capital, shorter approval cycles, and delivers faster ROI compared to full system replacement.

________________________________________

FAQ 6: What are the main technical challenges in scaling edge AI vision systems?

Answer:

The biggest challenges are not model accuracy but system-level factors: reliable sensor integration, stable interfaces, latency control, physical installation constraints, long-term maintenance, and remote manageability across thousands of nodes.

________________________________________

Answer:

By avoiding cloud storage of identifiable images and performing analytics locally, edge AI systems minimize exposure to biometric data regulations. This shortens internal approval processes and reduces long-term liability associated with data breaches or regulatory enforcement.

Answer:

In AI retail, edge vision enables real-time detection of events such as item handling or presence without introducing checkout delays. Processing data locally reduces false positives, limits customer friction, and avoids transmitting sensitive video data off-site.

Answer:

Industrial AI boxes provide deterministic latency, robust connectivity, and compatibility with operational technology (OT) environments. They allow vision workloads to run reliably at the edge while integrating with existing MES, PLC, or SCADA systems.

Answer:

Decision-makers should assess total cost of ownership, retrofit feasibility, privacy architecture, system integration effort, long-term maintenance requirements, and scalability. Successful deployments prioritize operational reliability and compliance over experimental features.

FAQ 11: The report claims 68.5% savings from moving to Edge AI. Does this account for the higher upfront hardware cost of AI cameras?

Answer: Yes. While an AI-capable Global Shutter camera (like the UC-501) has a higher BOM cost than a standard webcam, the break-even point is typically reached in less than 8 months. The savings come from eliminating recurring cloud ingestion fees (AWS Kinesis/S3) and reducing bandwidth load. Our 2026 ROI Calculator models this amortization specifically for 1,000+ unit deployments.
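The payback logic itself is a single division: months to break even = extra hardware cost per unit ÷ monthly savings per unit. The sketch below uses the per-endpoint savings implied by Table 3 ($545,120 per year across 1,000 endpoints); the hardware premiums are hypothetical placeholders to replace with real quotes, and this is not the 2026 ROI Calculator mentioned in the answer.

```python
def payback_months(hardware_premium_per_unit: float,
                   annual_savings_fleet: float, units: int) -> float:
    """Months until per-unit recurring savings repay the extra hardware cost."""
    monthly_savings_per_unit = annual_savings_fleet / units / 12
    return hardware_premium_per_unit / monthly_savings_per_unit


ANNUAL_SAVINGS = 545_120  # Table 3: cloud minus edge, 1,000 endpoints
UNITS = 1_000

for premium in (150, 300, 450):  # hypothetical extra cost per AI-capable camera, USD
    print(f"${premium} premium -> {payback_months(premium, ANNUAL_SAVINGS, UNITS):.1f} months")

# Largest per-unit premium that still pays back within 8 months:
print(f"8-month ceiling: ${8 * ANNUAL_SAVINGS / UNITS / 12:.0f} per unit")
```

Under Table 3's savings, the sub-8-month figure therefore holds only while the per-unit premium stays below roughly $363; a higher premium or lower savings stretches payback proportionally.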

FAQ 12: Can a simple USB camera retrofit really stop the "Banana Trick" (Item Switching) on existing kiosks?

Answer: Yes, but it requires the right sensor. Standard webcams struggle with the angle and lighting of kiosk trays. A specialized 15mm Micro-Form Factor module with a wide-angle lens can be retrofitted into the existing bezel. It runs local inference to match the "Visual Object" (Steak) against the "Weighed PLU" (Banana) in real-time, alerting the POS before payment is processed—without needing a full kiosk replacement.
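The matching step can be expressed as a simple rule run before payment: compare the class the vision model sees with the class implied by the entered PLU, and flag the line if they disagree with enough confidence. The mapping, class names, and threshold below are hypothetical; a real system would use the retailer's PLU catalogue and its own model's label set. Only the use of PLU 4011 for bananas reflects a real standard code.

```python
from dataclasses import dataclass

# Hypothetical mapping from produce PLU codes to the vision model's class names.
PLU_TO_CLASS = {"4011": "banana"}  # 4011 is the standard PLU for yellow bananas

MIN_CONFIDENCE = 0.85  # assumed threshold; tune to keep false alerts low


@dataclass
class Detection:
    label: str         # top-1 class from the on-device model
    confidence: float  # model score in [0, 1]


def check_line_item(plu: str, detection: Detection) -> str:
    """Return 'ok', 'alert' (likely item switch) or 'review' (uncertain)."""
    expected = PLU_TO_CLASS.get(plu)
    if expected is None:
        return "review"            # PLU not covered by the model
    if detection.label == expected:
        return "ok"
    if detection.confidence >= MIN_CONFIDENCE:
        return "alert"             # confident mismatch: hold before payment
    return "review"                # low-confidence mismatch: soft prompt only


print(check_line_item("4011", Detection("banana", 0.97)))  # ok
print(check_line_item("4011", Detection("steak", 0.93)))   # alert
print(check_line_item("4011", Detection("steak", 0.55)))   # review
```

Routing low-confidence mismatches to a soft prompt rather than a hard stop is one way to limit customer friction from false positives.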

FAQ 13: Why are "Industrial Locking Connectors" critical for AMRs? Can't we just use glue on standard USB cables?

Answer: Glue degrades over time due to thermal cycles and does not prevent internal wire fatigue. Warehouse AMRs face constant low-frequency vibration (from uneven floors). This causes micro-disconnects in consumer USB cables, leading to "Blind Robot" failures. Industrial connectors (like JST/Molex locking headers) on the UC-501 are rated for logistics-grade shock and vibration standards, supporting 99.9% uptime targets.

FAQ 14: How does local Edge Processing help with GDPR and BIPA (Biometric) compliance?

Answer: The safest way to handle biometric data is not to store it. Edge AI solutions can analyze demographics (age/gender) or dwell time in RAM, generate an anonymous statistical token, and immediately discard the image frame. This "Privacy by Design" approach ensures that no PII (Personally Identifiable Information) or face templates are stored on any disk or cloud, significantly lowering legal liability.
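In code, the pattern is mostly about what a function is allowed to return: the frame exists only as a local input, and only aggregate counts leave the function. The sketch below is illustrative; `detect_people` is a hypothetical stand-in for an on-device detector, and the output fields and `screen_id` value are assumptions. Python cannot guarantee that frame memory is wiped, only that no reference to it is kept or written out.

```python
import json
import time
from typing import Callable, List, Sequence


def summarize_frame(frame: Sequence, detect_people: Callable[[Sequence], List[dict]],
                    screen_id: str) -> str:
    """Analyze one frame in memory and return anonymous metadata only."""
    detections = detect_people(frame)           # runs locally, e.g. on an NPU
    looking = sum(1 for d in detections if d.get("facing_screen"))
    # Only counts leave this function: no pixels, crops, embeddings or IDs.
    return json.dumps({
        "screen": screen_id,
        "ts": int(time.time()),
        "people": len(detections),
        "facing_screen": looking,
    })


def fake_detector(frame):
    """Hypothetical detector stub used for the example."""
    return [{"facing_screen": True}, {"facing_screen": False}]


record = summarize_frame([[0] * 4] * 4, fake_detector, screen_id="lobby-01")
print(record)
```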

FAQ 15: We have 5,000 legacy Windows 10 kiosks in the field. Is the UC-501 compatible without driver issues?

Answer: Yes. The UC-501 utilizes standard UVC (USB Video Class) protocols, making it plug-and-play with Windows, Linux (Ubuntu/Debian), and Android systems without proprietary drivers. For older CPUs, the camera's onboard ISP (Image Signal Processor) handles auto-exposure and white balance, offloading the processing burden from the legacy host machine.
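On Linux, one quick way to confirm that a camera is running on the in-kernel UVC driver rather than a vendor driver is to inspect sysfs: each `/sys/class/video4linux/videoN` entry typically has a `name` file and a `device/driver` link that resolves to `uvcvideo` for UVC devices. A minimal sketch, assuming a standard sysfs layout; it only reads files and never opens the camera.

```python
from pathlib import Path
from typing import Dict, List, Optional


def list_v4l2_devices(sysfs_root: str = "/sys/class/video4linux") -> List[Dict[str, Optional[str]]]:
    """List video nodes with their kernel driver name (Linux only)."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return []                                  # not Linux, or no video devices
    devices = []
    for node in sorted(root.iterdir()):
        name_file = node / "name"
        name = name_file.read_text().strip() if name_file.is_file() else "?"
        driver_link = node / "device" / "driver"   # symlink to the bound driver
        driver = driver_link.resolve().name if driver_link.exists() else None
        devices.append({"node": f"/dev/{node.name}", "name": name, "driver": driver})
    return devices


for dev in list_v4l2_devices():
    status = "standard UVC driver" if dev["driver"] == "uvcvideo" else "check driver"
    print(f'{dev["node"]}: {dev["name"]} ({dev["driver"]}) -> {status}')
```

Many UVC cameras expose two nodes (one for video, one for metadata); both report the same driver.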

FAQ 16: Visual SLAM vs. LiDAR for 2026 warehouse robots—which is better?

Answer: While LiDAR is excellent for range, it lacks semantic understanding. Visual SLAM (using Global Shutter cameras) provides 3D navigation plus object recognition (e.g., distinguishing a person from a forklift). For 2026, the trend is Sensor Fusion or "Vision-First" navigation, which offers richer data for Digital Twins at a fraction of the cost of 3D LiDAR arrays.

Useful related article links

1. 2026 Edge AI Vision Trends: Signage Player, AI Retail, Robotics & Industrial AI Boxes

2. Goobuy — Professional Micro USB Camera for AI Edge Vision

3. Choosing the Right USB Camera for Edge AI in 2026

4. 2025 Digital Signage Media Players: 24 Key Events + 5 Trends